The most beautiful thing you can experience is mysterious - Einstein

Tldr: software tools and libraries that leverage Data-Centric computing can be used to accelerate scientific discovery.

One area that caught my attention was Compute driven scientific discovery. So i have been trying to understand from first principles what role computing/AI will play in scientific discovery in coming years. Starting from a blank canvas, i thought it ’d be best to write down some stuff and possibly see if a picture emerges and if we can also find some common ground for projects and develop a vision statement for atreides research lab. Here are some long, random and incomplete thoughts starting from very basics:

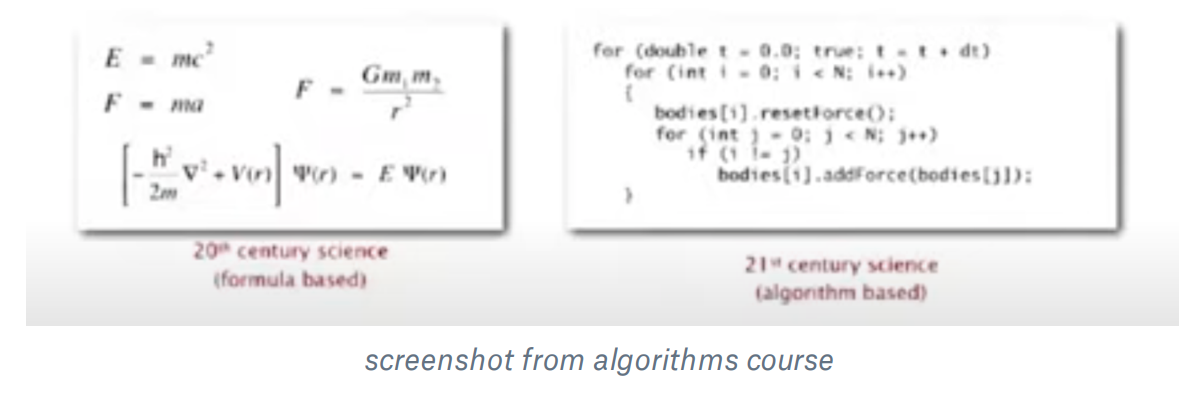

Folks with a CS background are trained in algorithmic thinking where we learn to thinking about solving problems using computation. Algorithms are the fundamental unit of programming and computer science. But increasingly in terms of applications they go beyond software development as well. Avi Higderson says “Algorithms are a common language for nature, human, and computer.” Even though the field of CS is new and increasingly we are starting to realise that algorithmic thinking is a universal framework that can be applied to hard sciences as well. Robert Sedgewick who teaches algorithms at Princeton says computational models are replacing math models in scientific inquiry/discovery:

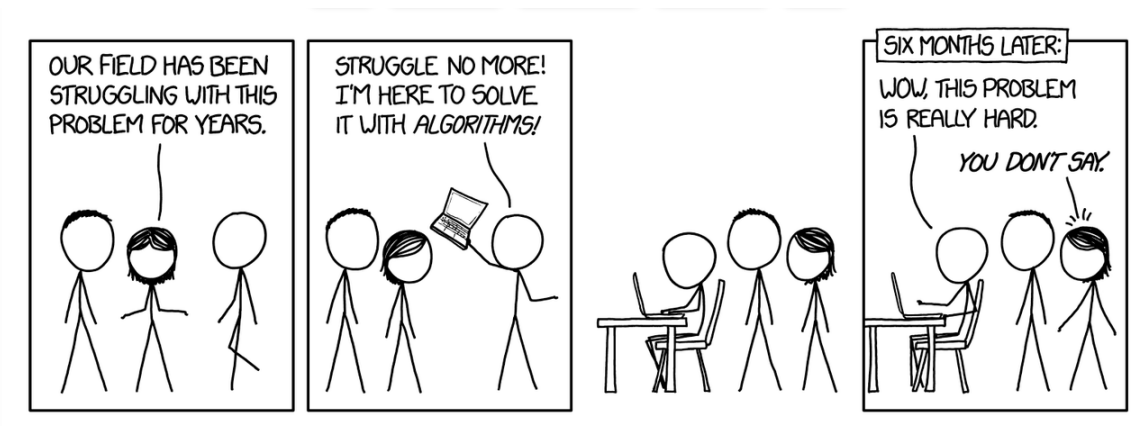

This means algorithms are a common language for understanding nature where we can simulate the given phenomena in order to better understand it. This attitude however, does have its limitations:

Data centric computing and Data Science computer science has evolved as a discipline. Its interesting to see how MIT’s intro to computation course has changed its structure overtime with emphasis on technical topics for Data analysis as well. AI Software is replacing traditional software Some dub the phenomena as software 2.0. I like the term data-centric computing for explaining this phenomena.

Data science is the process of formulating a quantitative question that can be answered with data, collecting and cleaning the data, analyzing the data, and communicating the answer to the question to a relevant audience. If we do want a concise definition, the following seems to be reasonable: Data science is the application of computational and statistical techniques to address or gain insight into some problem in the real world.

The key phrases of importance here are “computational” (data science typically involves some sort of algorithmic methods written in code), “statistical” (statistical inference lets us build the predictions that we make), and “real world” (we are talking about deriving insight not into some artificial process, but into some “truth” in the real world).

Another way of looking at it, in some sense, is that data science is simply the union of the various techniques that are required to accomplish the above. That is, something like:

Data science = statistics + data collection + data preprocessing + machine learning + visualisation + business insights + scientific hypotheses + big data + (etc)

This definition is also useful, mainly because it emphasizes that all these areas are crucial to obtaining the goals of data science. In fact, in some sense data science is best defined in terms of what it is not, namely, that is it not (just) any one of these subjects above.

Data centric = data science + data structures

Data science = statistics + data collection + data preprocessing + machine learning + visualisation + business insights + scientific hypotheses + big data + (etc)

Data centric computing is characterised by programs interrogate data: that is, programs are tools for answering questions. read more

AI as an enabler of scientific discovery - Case Studies

In the mid-twentieth century, Margaret Oakley Dayhoff pioneered the analysis of protein sequencing data, a forerunner of genome sequencing, leading early research that used computers to analyse patterns in the sequences. The expression ‘artificial intelligence’ today is therefore an umbrella term. It refers to a suite of technologies that can perform complex tasks when acting in conditions of uncertainty, including visual perception, speech recognition, natural language processing, reasoning, learning from data, and a range of optimisation problems.

Using genomic data to predict protein structures: Understanding a protein’s shape is key to understanding the role it plays in the body. By predicting these shapes, scientists can identify proteins that play a role in diseases, improving diagnosis and helping develop new treatments. The process of determining protein structures is both technically difficult and labour-intensive, yielding approximately 100,000 known structures to date5. While advances in genetics in recent decades have provided rich datasets of DNA sequences, determining the shape of a protein from its corresponding genetic sequence – the protein-folding challenge – is a complex task. To help understand this process, researchers are developing machine learning approaches that can predict the threedimensional structure of proteins from DNA sequences. The AlphaFold project at DeepMind, for example, has created a deep neural network that predicts the distances between pairs of amino acids and the angles between their bonds, and in so doing produces a highly-accurate prediction of an overall protein structure.

Understanding complex organic chemistry The goal of this pilot project between the John Innes Centre and The Alan Turing Institute is to investigate possibilities for machine learning in modelling and predicting the process of triterpene biosynthesis in plants. Triterpenes are complex molecules which form a large and important class of plant natural products, with diverse commercial applications across the health, agriculture and industrial sectors. The triterpenes are all synthesized from a single common substrate which can then be further modified by tailoring enzymes to give over 20,000 structurally diverse triterpenes. Recent machine learning models have shown promise at predicting the outcomes of organic chemical reactions. Successful prediction based on sequence will require both a deep understanding of the biosynthetic pathways that produce triterpenes, as well as novel machine learning methodology

• Finding patterns in astronomical data: Driving scientific discovery from particle physics experiments and large scale astronomical data • Understanding the effects of climate change on cities and regions: Satellite imaging to support conservation

The ‘traditional’ way to apply data science methods is to start from a large data set, and then apply machine learning methods to try to discover patterns that are hidden in the data – without taking into account anything about where the data came from, or current knowledge of the system. But might it be possible to incorporate existing scientific knowledge (for example, in the form of a statistical ‘prior’) so that the discovery process is constrained, in order to produce results which respect what researchers already know about the system. For example, if trying to detect the 3D shape of a protein from image data, could chemical knowledge of how proteins fold be incorporated in the analysis, in order to guide the search? the goal of scientific discovery is to understand. Researchers want to know not just what the answer is but why. Are there ways of using AI algorithms that will provide such explanations? In what ways might AI-enabled analysis and hypothesis-led research sit alongside each other in future? How might people work with AI to solve scientific mysteries in the years to come? Is it possible that one day, computational methods will not only discover patterns and unusual events in data, but have enough domain knowledge built in that they can themselves make new scientific breakthroughs? Could they come up with new theories that revolutionise our understanding, and devise novel experiments to test them out? Could they even decide for themselves what the worthwhile scientific questions are? And worthwhile to whom?

more:

https://www.nature.com/articles/d41586-021-02762-6 https://ai4sciencecommunity.github.io/neurips22.html https://www.csiro.au/en/research/technology-space/ai/artificial-intelligencefor-science-report https://royalsociety.org/-/media/policy/projects/ai-and-society/AI-revolution-inscience.pdf?la=en-GB&hash=5240F21B56364A00053538A0BC29FF5F

Role of Software:

developing software that makes their day to day life easier. E.g https://github.com/collections/software-in-science b. Data engineering related work for example building d

Julia:

Julia is a general-purpose interpreted language developed by a group at MIT, with numerical computing at the core of its design. It was first released in 2012 and slowly has matured to a point where version 1.0 was launched in 2018 (currently at v1.7). At its core, Julia is a high-level, dynamic programming language that offers performance approaching that of statically-typed languages like C++ and is increasingly being used by a huge of number universities and tech companies.

Performance: Julia, just like python is a dynamic language with optional types. In python types can change at run-time, costing an overhead as compared to C, which is statically typed. However, Julia uses multiple-dispatch, which makes defining types optional, so defining types doesn’t lead to that performance boost.

Some bragging rights highlighted by MIT at v1.0 launch:

Julia is the only high-level dynamic programming language in the “petaflop club,” having achieved 1.5 petaflop/s using 1.3 million threads, 650,000 cores and 9,300 Knights Landing (KNL) nodes to catalogue 188 million stars, galaxies, and other astronomical objects in 14.6 minutes on the world’s sixth-most powerful supercomputer.

“The release of Julia 1.0 signals that Julia is now ready to change the technical world by combining the high-level productivity and ease of use of Python and R with the lightning-fast speed of C++,” Edelman says.

Rich data science and visualisation libraries:: From a data science perspective, anyone who is already pushing the boundaries of python and is interested in high-performance numerical computing should definitely give Julia a try. Developing high-performance libraries focusing on scientific computing and numerical computations have been prioritised since day 1. This means Julia solves the two language problem where it will allow you to quickly convert those prototypes into high-performance implementations without the extra overheads of shifting between two different languages. It is quite similar to python syntactically, plus you can use all your existing python libraries in it. You can also call C and Fortran libraries natively.

Big data and Spark Eco-System:

Physics:

There are a lot of cool advances in AI and physics. In my particular field of condensed matter physics, a number come to mind. One is trying to automatically extract synthesis recipes from the literature. Imagine that you want to see how people have synthesized a given solid state compound. Then searching through the literature can be painful. A great collaboration from MIT/Berkeley did this using NLP. I don't know what blood oaths they signed, but they were able to obtain a huge corpus of articles. But, how to know if an article contains a synthesis recipe? They set up their internal version of Mechanical Turk and had their students label a number of articles. Then they had to find the recipes, represent them as a DAG, etc. They have now incorporated the result with the Materials project (https://materialsproject.org/apps/synthesis/#).

There are groups that are using graph neural networks to understand statistical mechanics and microscopy. There are also a number of groups working on trying to automate synthesis (most of it is Gaussian process based, a handful of us are trying reinforcement learning--it's painful). On the theory side, there is work speeding up simulation efforts (ex. DFT functionals) as well as determining if models and experiment agree (Eun Ah Kim rocks!).

Outside of my field, there has been a push with Lagrangian/Hamiltonian NNs that is really cool in that you get interpretability for "free" when you encode physics into the structure of the network. Back to my field, Patrick Riley (Google) has played with this in the context of encoding symmetries in a material into the structure of NNs.

There are of course challenges. In some fields, there is a huge amount of data--in others, we have relatively small data, but rich models. There are questions on what are the correct representations to use. Not to mention the usual issues of trust/interpretability. There's also a question of talent given opportunities in industry.

TLDR: Using neural network to model physical systems as black boxes and then, later, using symbolic regression (genetic algorithm to find a formula that fits a function) on the model to make it explainable and improve its generalization capacities.

The system managed to reinvent Newton's second law and find a formula to predict the density of dark matter.

(note that symbolic regression is often said to improve explainability but that, left unchecked, it tends to produce huge unwieldy formulas full of magical constants)